Paper: Taming Polysemanticity in LLMs: Provable Feature Recovery via Sparse Autoencoders

AUTHORS: Siyu Chen*, Heejune Sheen*, Xuyuan Xiong†, Tianhao Wang§, and Zhuoran Yang*

AFFILIATIONS.

*Department of Statistics and Data Science, Yale University

†Antai College of Economics and Management, Shanghai Jiao Tong University

§Toyota Technological Institute at Chicago

LINKS.

ArXiv: arxiv.org/abs/

Abstract

We address the challenge of theoretically grounded feature recovery using Sparse Autoencoders (SAEs) for interpreting Large Language Models. Current SAE training methods lack mathematical guarantees and face issues like hyperparameter sensitivity. We propose a statistical framework for feature recovery that models polysemantic features as sparse mixtures of monosemantic concepts. Based on this, we develop a “bias adaptation” SAE training algorithm that dynamically adjusts network biases for optimal sparsity. We prove that this algorithm correctly recovers all monosemantic features under our statistical model. Our improved variant, Group Bias Adaptation (GBA), outperforms existing methods on LLMs up to 1.5 billion parameters in terms of sparsity-loss trade-off and feature consistency. This work provides the first SAE algorithm with theoretical recovery guarantees, advancing interpretable and trustworthy AI through enhanced mechanistic understanding.
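Bias adaptation relies on a simple lever. In a ReLU encoder unit relu(w·x + b), lowering the bias b raises the activation threshold, so the unit fires on fewer inputs. The minimal NumPy sketch below illustrates this with one unit-norm encoder row and synthetic Gaussian inputs. The dimensions, the data, and the helper `activation_frequency` are illustrative assumptions, not the paper's experimental setup.

```python
import numpy as np

def activation_frequency(X, w, b):
    """Fraction of inputs on which the ReLU unit relu(X @ w + b) is active (> 0)."""
    pre = X @ w + b
    return float(np.mean(pre > 0))

rng = np.random.default_rng(0)
d, n = 64, 10_000
X = rng.standard_normal((n, d)) / np.sqrt(d)   # synthetic inputs with roughly unit norm (assumption)
w = rng.standard_normal(d)
w /= np.linalg.norm(w)                          # unit-norm encoder row

# A more negative bias gives a higher threshold, so the unit fires less often.
for b in [0.0, -0.1, -0.2, -0.3]:
    print(f"bias={b:+.1f}  frequency={activation_frequency(X, w, b):.3f}")
```

Because the set of inputs with w·x + b > 0 shrinks as b decreases, the printed frequencies can only go down. Setting each bias to hit a chosen firing rate is therefore a direct way to control sparsity.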

Key Contributions

A novel statistical framework that rigorously formalizes feature recovery by modeling polysemantic features as sparse combinations of underlying monosemantic concepts, and establishes a precise notion of feature identifiability.
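To illustrate the data model, the sketch below draws each input as a sparse, nonnegative combination of k unit-norm monosemantic directions, stored as the rows of a hypothetical matrix V. The names `V`, `k`, and `sample_sparse_mixture`, and the uniform coefficient distribution, are assumptions for illustration only. The paper's exact generative assumptions may differ.

```python
import numpy as np

def sample_sparse_mixture(V, n_samples, k, rng):
    """Draw inputs x = sum_{i in S} c_i * V[i], with |S| = k active concepts per input.

    V: (n_features, d) matrix whose rows are unit-norm monosemantic features (hypothetical).
    Returns X of shape (n_samples, d) and sparse coefficients C of shape (n_samples, n_features).
    """
    n_features, _ = V.shape
    C = np.zeros((n_samples, n_features))
    for row in range(n_samples):
        support = rng.choice(n_features, size=k, replace=False)  # which concepts are present
        C[row, support] = rng.uniform(0.5, 1.5, size=k)          # positive strengths (assumption)
    return C @ V, C

rng = np.random.default_rng(0)
n_features, d = 256, 64          # more concepts than dimensions
V = rng.standard_normal((n_features, d))
V /= np.linalg.norm(V, axis=1, keepdims=True)
X, C = sample_sparse_mixture(V, n_samples=1000, k=3, rng=rng)
print(X.shape, (C > 0).sum(axis=1).max())  # prints: (1000, 64) 3
```

Each observed vector in X mixes several concepts. Recovering the rows of V from X alone is the feature-recovery task that the framework formalizes.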

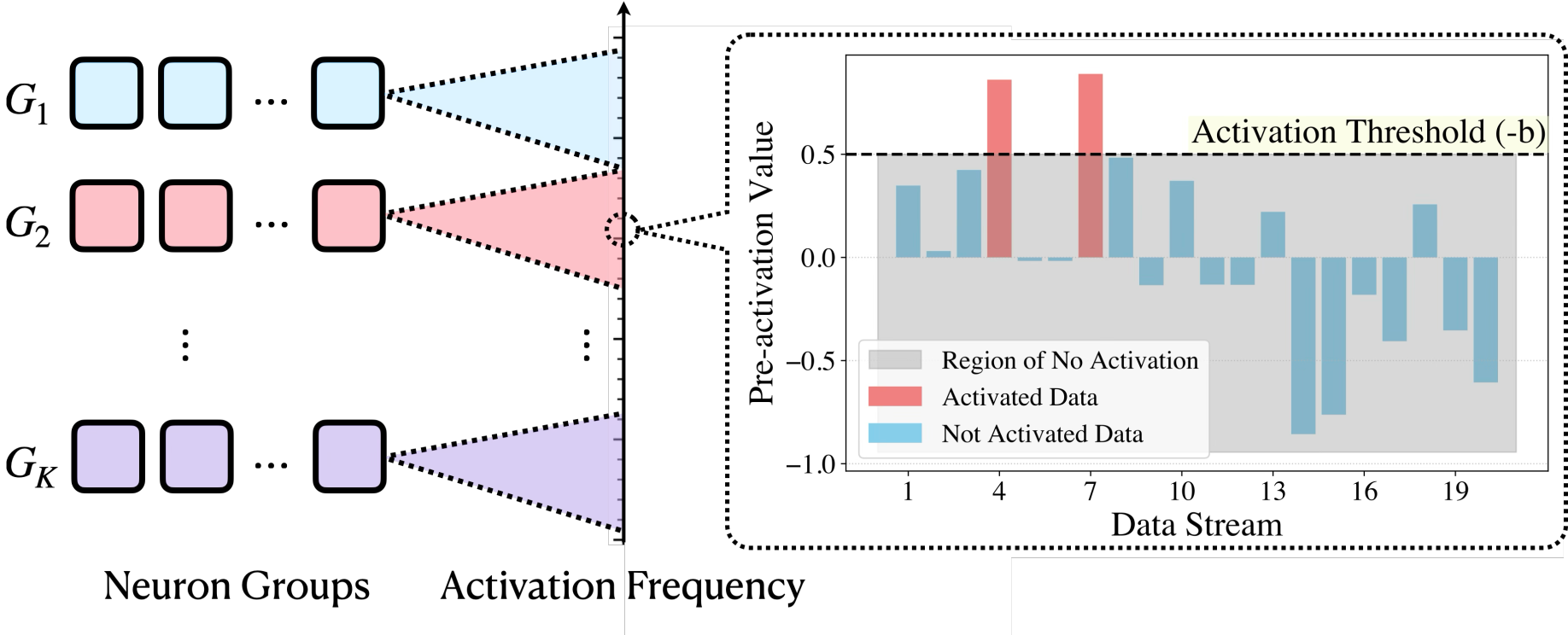

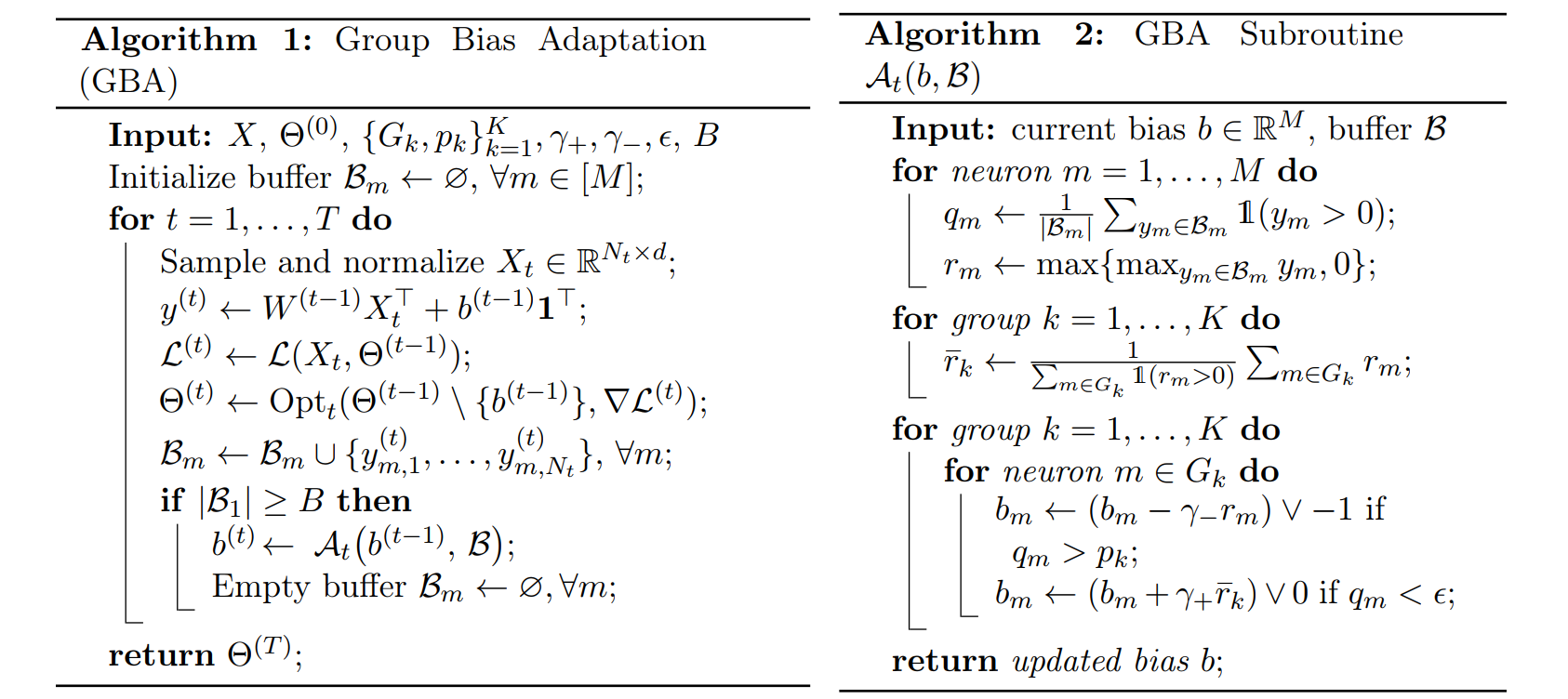

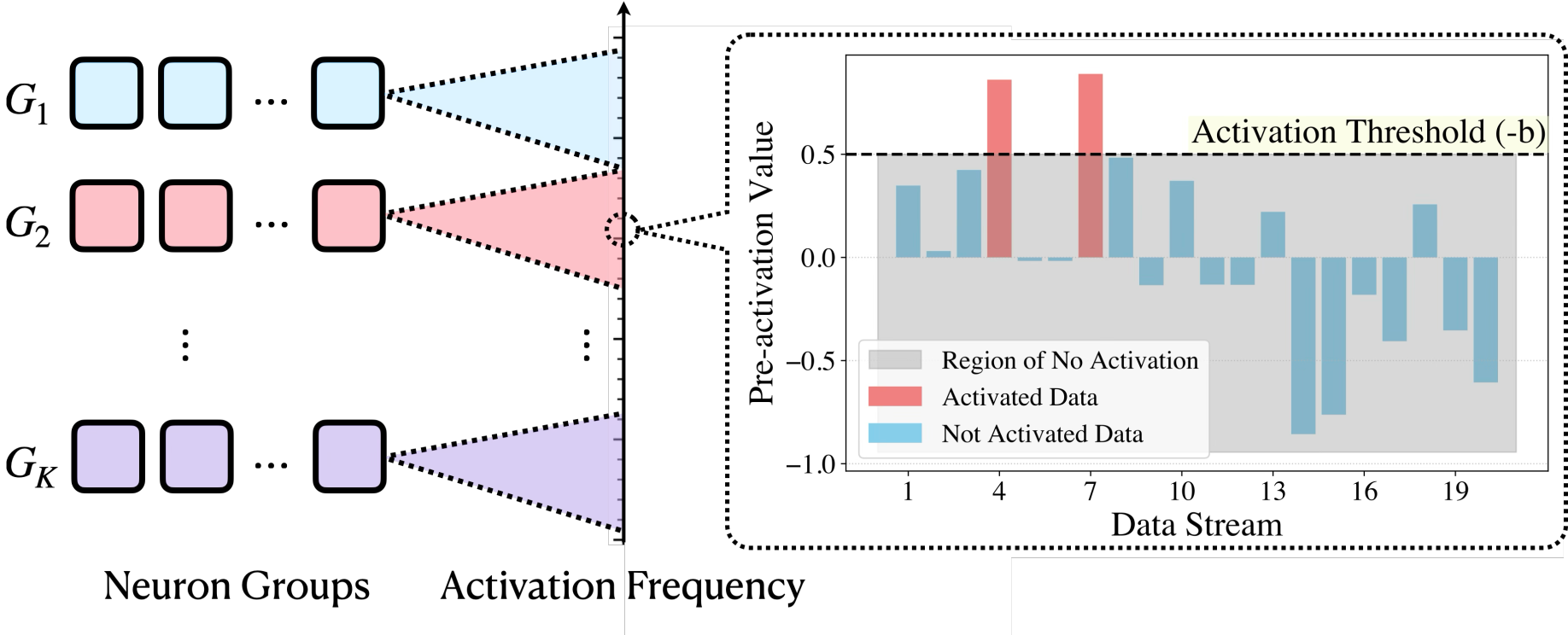

An innovative SAE training algorithm, Group Bias Adaptation (GBA), which adaptively adjusts the network's bias parameters to enforce a target activation sparsity, with distinct groups of neurons assigned different target activation frequencies.
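The group-wise idea can be sketched as a feedback loop on the biases. On each batch, measure every neuron's firing rate, compare it with the target frequency of that neuron's group, and nudge the bias accordingly. Several elements here are assumptions: the proportional update, the step size `lr`, the two target frequencies, the helper `group_bias_step`, and the Gaussian stand-in for encoder pre-activations. The paper's actual GBA update rule and schedule may differ, and in a full SAE the encoder and decoder weights would also be trained.

```python
import numpy as np

def group_bias_step(pre_acts, b, group_ids, targets, lr=1.0):
    """One hypothetical bias update pushing each neuron's firing rate toward its group's target.

    pre_acts:  (batch, m) encoder pre-activations W @ x, before adding the bias
    b:         (m,) current biases
    group_ids: (m,) integer group index of each neuron
    targets:   (G,) target activation frequency of each group
    """
    freq = np.mean(pre_acts + b > 0, axis=0)   # empirical firing rate per neuron
    target = targets[group_ids]                # each neuron inherits its group's target
    # Fires too often -> lower the bias; fires too rarely (or is dead) -> raise it.
    return b - lr * (freq - target)

rng = np.random.default_rng(0)
m = 8
group_ids = np.repeat([0, 1], m // 2)          # two groups of four neurons
targets = np.array([0.1, 0.01])                # group 0: frequent, group 1: rare
b = np.zeros(m)

for _ in range(500):
    # Hypothetical stand-in for W @ x: i.i.d. standard normal pre-activations.
    pre_acts = rng.standard_normal((4096, m))
    b = group_bias_step(pre_acts, b, group_ids, targets)

fresh = rng.standard_normal((20_000, m))       # held-out batch to check the achieved rates
freq = np.mean(fresh + b > 0, axis=0)
for g, p in enumerate(targets):
    print(f"group {g}: target={p:.3f}  achieved={freq[group_ids == g].mean():.3f}")
```

Neurons in group 1 must fire ten times less often, so their biases settle at more negative values than those in group 0. Using several groups lets a single SAE cover both common and rare features.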

The first theoretical guarantee that an SAE training algorithm provably recovers all monosemantic features when the input data are sampled from our proposed statistical model.

Results

Algorithm Overview

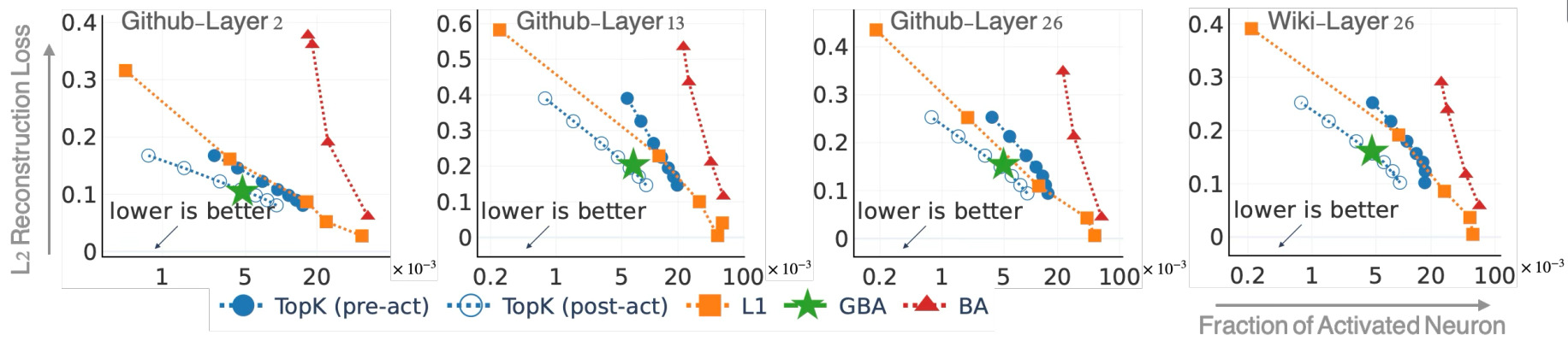

Performance Comparison

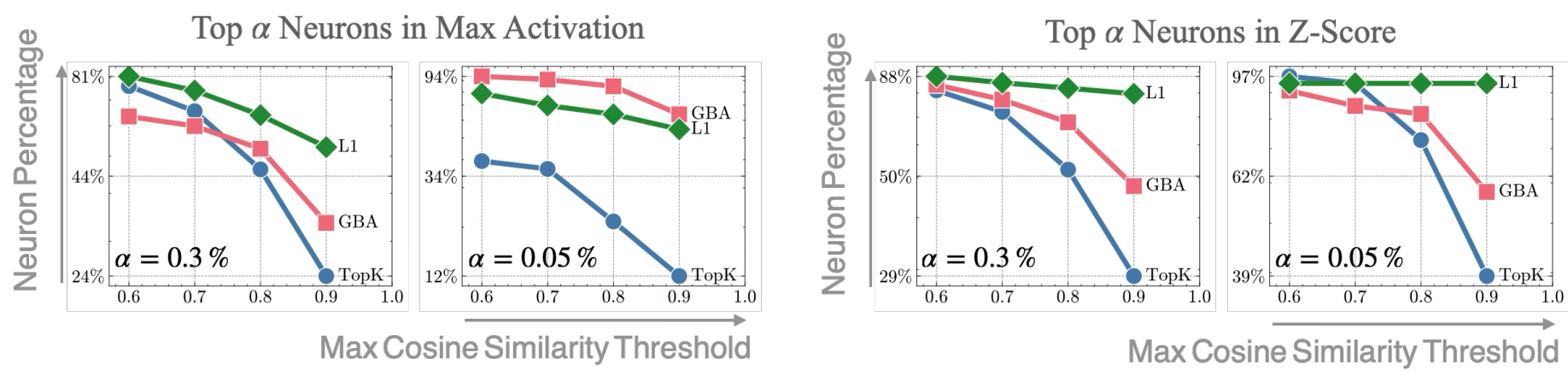

Feature Consistency

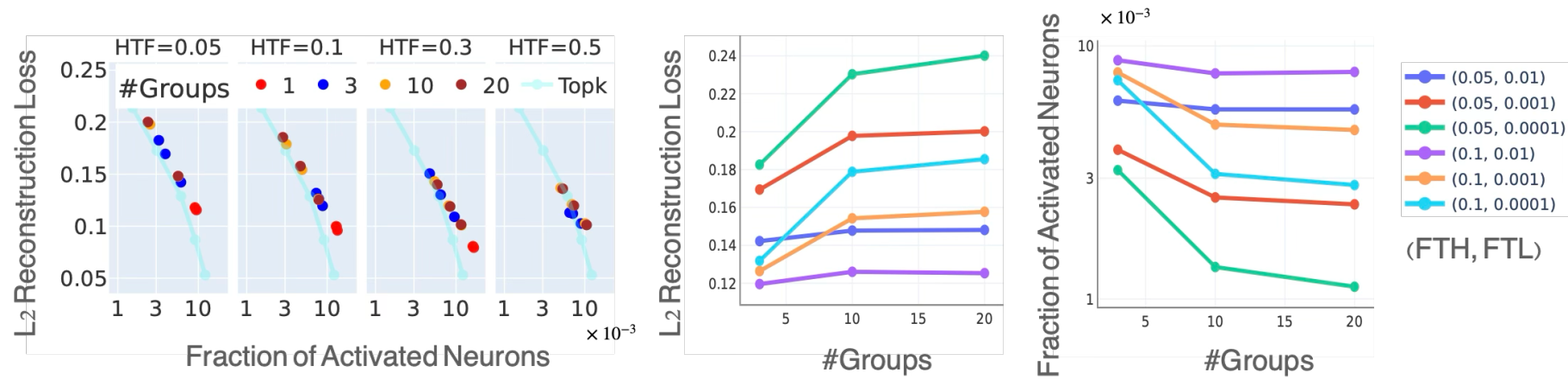

Group Ablation Study

Bias Adaptation Illustration

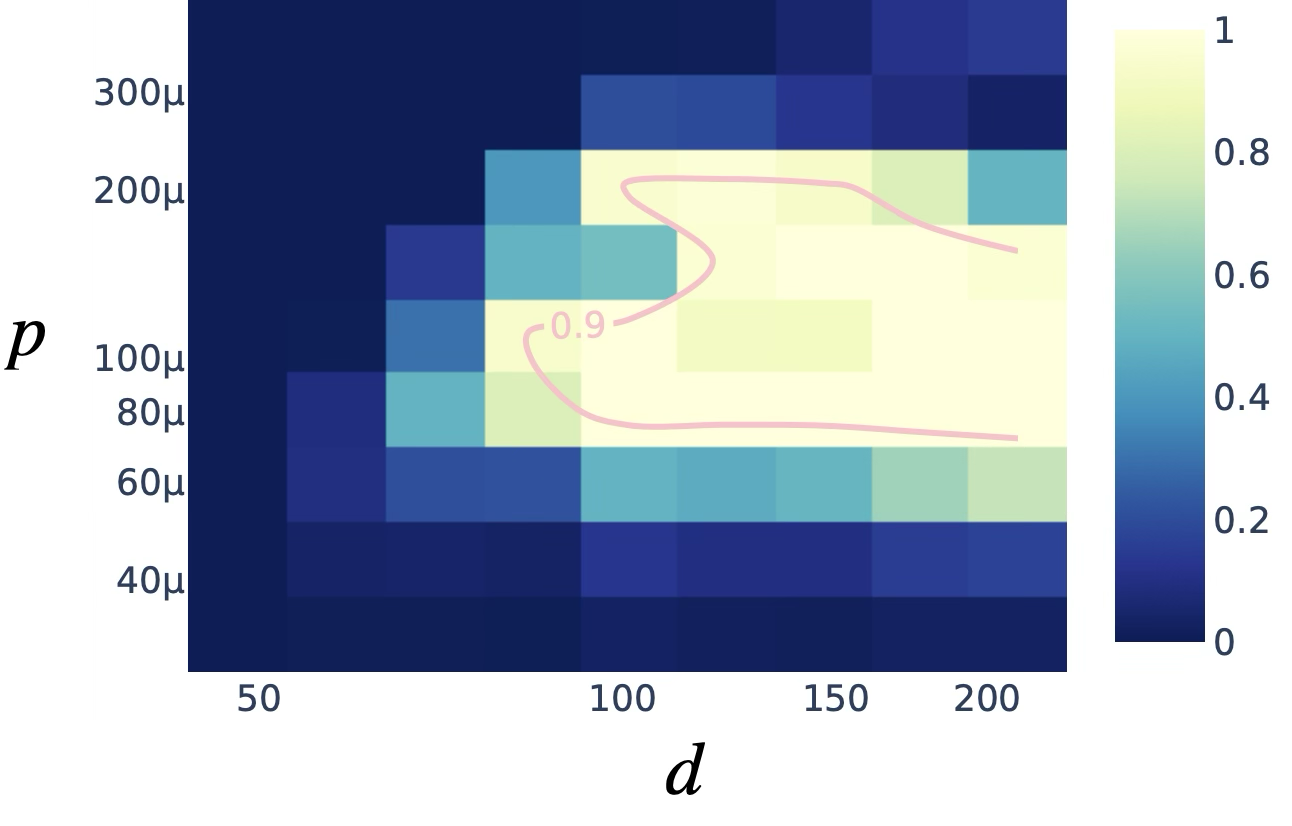

Selectivity Analysis

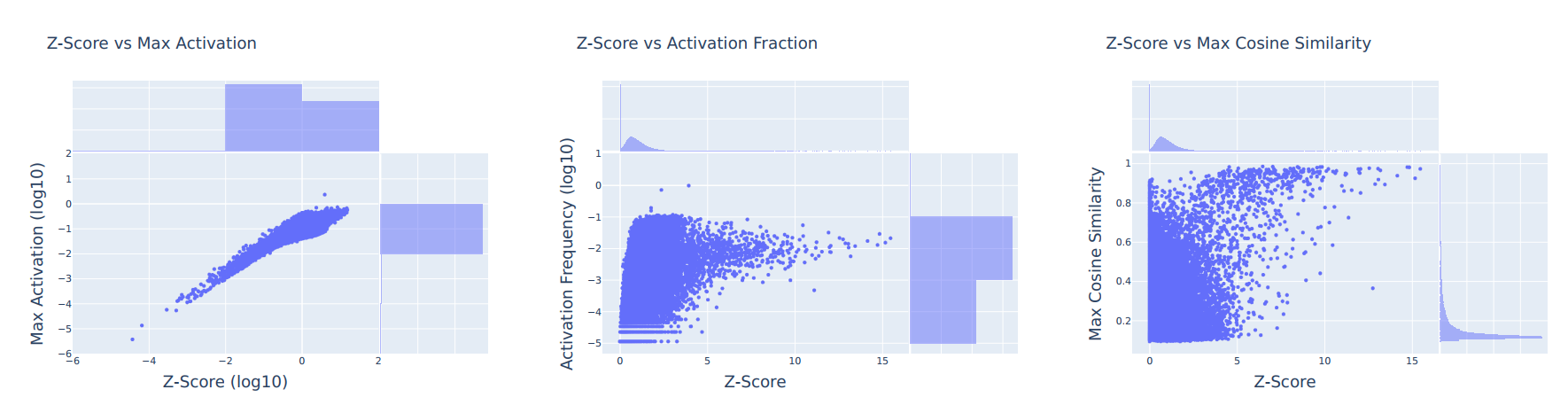

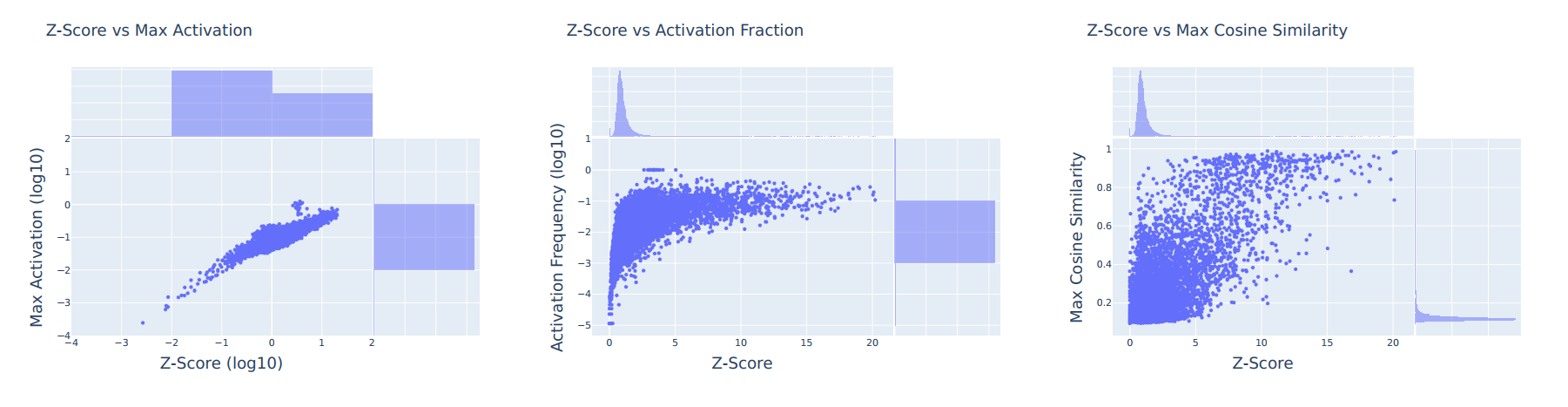

Z-Score Analysis